KUACC HPC (High Performance Computing) Cluster

An HPC cluster is a collection of separate servers (computers), called nodes, that are connected via a fast network.

Koç University has been providing high-performance computing (HPC) infrastructure to KU researchers since 2004. The current system was built in 2017 using the existing network infrastructure and compute nodes, with a parallel file system and service nodes provided by IT. It entered production on December 22, 2017.

The KUACC HPC Cluster has the following components:

Login Nodes

Compute Nodes

Service Nodes - Load Balancer, Headnode, NTP servers, MariaDB database

Network Infrastructure - High-Speed Network (InfiniBand)

Storage - Fast, Scalable Parallel File System (BeeGFS)

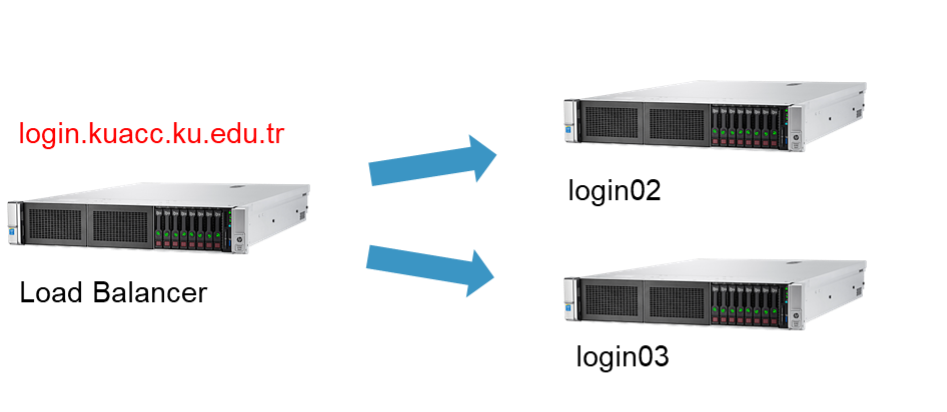

Login Nodes

Login nodes are shared by all users, so resource-intensive processes must not be run on them. Login nodes are meant for preparing work, such as moving and editing files or compiling code.

The KUACC HPC Cluster has two login nodes behind a load balancer. When a user connects to login.kuacc.ku.edu.tr via SSH, the connection is forwarded to one of the login nodes.
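The forwarding step can be pictured with a small Python model. This is only a sketch: the load balancer's actual scheduling policy is not documented here, so round-robin is assumed, and the `RoundRobinBalancer` class and its `forward` method are hypothetical names used for illustration.

```python
from itertools import cycle

# The two KUACC login nodes; the balancing policy below is an assumption.
LOGIN_NODES = ["login02", "login03"]


class RoundRobinBalancer:
    """Hypothetical model of the load balancer in front of the login nodes.

    Assumes a simple round-robin policy: each new SSH connection to
    login.kuacc.ku.edu.tr is forwarded to the next login node in turn.
    """

    def __init__(self, nodes):
        if not nodes:
            raise ValueError("at least one login node is required")
        self._next_node = cycle(nodes)
        self.assignments = {}  # client -> login node it was forwarded to

    def forward(self, client):
        node = next(self._next_node)
        self.assignments[client] = node
        return node


if __name__ == "__main__":
    lb = RoundRobinBalancer(LOGIN_NODES)
    for user in ["alice", "bob", "carol"]:
        print(user, "->", lb.forward(user))
    # alice -> login02, bob -> login03, carol -> login02
```

A real balancer may also weigh current load or keep a returning client on the same node; round-robin is used here only because it is the simplest policy that spreads connections evenly.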

| Login Node | Specifications |
|---|---|
| login02 | 2 x Intel(R) Xeon(R) CPU E5-2695 v4 @ 2.10GHz, 2 x 18 cores (36 cores), 512 GB RAM (16 x 32 GB), 1 x Quadro M2000 GPU, 1 x Tesla P4 GPU (8 GB) |
| login03 | 2 x Intel(R) Xeon(R) CPU E5-2695 v4 @ 2.10GHz, 2 x 18 cores (36 cores), 512 GB RAM (16 x 32 GB), 1 x Quadro M2000 GPU, 1 x Tesla P4 GPU (8 GB) |

Compute Nodes

Compute nodes are designated for running programs and performing computations. Any process that uses a significant amount of computational or memory resources must be executed on a compute node. The KUACC HPC Cluster consists of 27 compute nodes, categorized into two types:

Research Group Donated Nodes

These nodes are donated by research groups, and priority rules apply.

Members of the corresponding research group have priority access to these nodes.

Interruption Rule: If a research group member submits a job to their group's donated nodes and there are not enough free resources:

The workload manager checks the node’s resources.

It cancels and requeues the lowest-priority running job(s) to free up resources for the higher-priority job.
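The interruption rule can be sketched as a small preemption routine. This is a simplified Python model, not the workload manager's implementation: the `Job` and `Node` classes, the `start_with_preemption` function, the integer priority (higher means more important), and tracking only CPUs and memory are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    job_id: str
    priority: int  # assumed convention: higher value = higher priority
    cpus: int
    mem_gb: int


@dataclass
class Node:
    name: str
    total_cpus: int
    total_mem_gb: int
    running: list = field(default_factory=list)

    def free(self):
        """Return (free CPUs, free memory in GB) on this node."""
        used_cpus = sum(j.cpus for j in self.running)
        used_mem = sum(j.mem_gb for j in self.running)
        return self.total_cpus - used_cpus, self.total_mem_gb - used_mem


def start_with_preemption(node, incoming, requeue):
    """Start `incoming` on `node`, requeueing lower-priority jobs if needed.

    Only jobs with strictly lower priority than `incoming` may be
    interrupted, lowest priority first. Returns False (and changes
    nothing) if the job cannot fit even after all eligible preemptions.
    """
    victims = sorted(
        (j for j in node.running if j.priority < incoming.priority),
        key=lambda j: j.priority,
    )
    free_cpus, free_mem = node.free()
    # Check feasibility first so no job is interrupted for nothing.
    if (free_cpus + sum(j.cpus for j in victims) < incoming.cpus
            or free_mem + sum(j.mem_gb for j in victims) < incoming.mem_gb):
        return False
    for victim in victims:
        free_cpus, free_mem = node.free()
        if free_cpus >= incoming.cpus and free_mem >= incoming.mem_gb:
            break  # enough resources have been freed already
        node.running.remove(victim)  # cancel the running job...
        requeue.append(victim)       # ...and put it back in the queue
    node.running.append(incoming)
    return True


# Hypothetical job mix on a 36-core, 512 GB node.
queue = []
ke01 = Node("ke01", 36, 512, [Job("a", 1, 16, 128), Job("b", 5, 16, 128), Job("c", 2, 4, 64)])
print(start_with_preemption(ke01, Job("grp", 10, 20, 100), queue))  # True
print([j.job_id for j in queue])         # ['a', 'c']
print([j.job_id for j in ke01.running])  # ['b', 'grp']
```

Requeueing stops as soon as the incoming job fits, so job `b` keeps running even though it also has lower priority than the group member's job.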

The kuacc-nodes command provides detailed information about the nodes and their resources, including available CPUs, memory, GPUs, and the current status of each node.

| Hostname | Specifications |
|---|---|
| be[01-12] | 2 x Intel(R) Xeon(R) CPU E5-2640 @ 2.50GHz, 2 x 6 cores (12 cores), 512 GB RAM, 1 x Tesla K20m GPU |
| da[01-04] | 2 x Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz, 2 x 10 cores (20 cores), 256 GB RAM, 1 x Tesla K20m GPU |
| dy02 | 2 x Intel(R) Xeon(R) CPU E5-2695 v4 @ 2.10GHz, 2 x 18 cores (36 cores), 512 GB RAM, 4 x Tesla K80 GPUs |
| dy03 | 2 x Intel(R) Xeon(R) CPU E5-2695 v2 @ 2.40GHz, 2 x 12 cores (24 cores), 448 GB RAM, 8 x Tesla K80 GPUs |
| ke[01-08] | 2 x Intel(R) Xeon(R) CPU E5-2695 v4 @ 2.10GHz, 2 x 18 cores (36 cores), 512 GB RAM |
| sm01 | 2 x Intel(R) Xeon(R) CPU E5-2680 v2 @ 2.80GHz, 2 x 10 cores (20 cores), 64 GB RAM |
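The bracketed hostnames in the table are a compact range notation: `be[01-12]` stands for `be01` through `be12`. The Python sketch below expands such ranges (only the single-range form used in this table) and sums the per-node figures transcribed from the table, which also reproduces the count of 27 compute nodes given above. GPU figures count cards as listed; `expand_hostlist` and `cluster_totals` are hypothetical helpers, not part of any cluster tool.

```python
import re

# Transcribed from the compute node table:
# (hostname range, cores per node, RAM in GB per node, GPU cards per node)
NODE_TABLE = [
    ("be[01-12]", 12, 512, 1),
    ("da[01-04]", 20, 256, 1),
    ("dy02", 36, 512, 4),
    ("dy03", 24, 448, 8),
    ("ke[01-08]", 36, 512, 0),
    ("sm01", 20, 64, 0),
]

_RANGE = re.compile(r"^(?P<prefix>[A-Za-z]+)\[(?P<start>\d+)-(?P<end>\d+)\]$")


def expand_hostlist(expr):
    """Expand 'prefix[NN-MM]' into hostnames, keeping zero-padding.

    Only a single bracketed range is supported (a simplification);
    anything else is treated as a plain hostname.
    """
    m = _RANGE.match(expr)
    if m is None:
        return [expr]
    start, end = m["start"], m["end"]
    width = len(start)
    return [f"{m['prefix']}{i:0{width}d}" for i in range(int(start), int(end) + 1)]


def cluster_totals(table):
    """Sum node count, cores, RAM and GPU cards over the whole table."""
    nodes = cores = ram_gb = gpus = 0
    for expr, node_cores, node_ram, node_gpus in table:
        n = len(expand_hostlist(expr))
        nodes += n
        cores += n * node_cores
        ram_gb += n * node_ram
        gpus += n * node_gpus
    return {"nodes": nodes, "cores": cores, "ram_gb": ram_gb, "gpus": gpus}


print(expand_hostlist("da[01-04]"))  # ['da01', 'da02', 'da03', 'da04']
print(cluster_totals(NODE_TABLE))
# {'nodes': 27, 'cores': 592, 'ram_gb': 12288, 'gpus': 28}
```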

Service & Virtualization Nodes

HPC services run on Proxmox Virtual Environment management nodes. Users are not allowed to log in to these nodes.

Network Infrastructure

A high-performance computing system needs its servers and storage to be connected by a high-speed, low-latency network.

The KUACC HPC Cluster uses a Mellanox FDR 56 Gb/s InfiniBand switch, which provides very high throughput and very low latency.
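As a rough illustration of what the link rate means, the idealized time to move data is its size in bits divided by the link rate. The sketch below uses the nominal 56 Gb/s FDR rate and ignores encoding, protocol overhead, and storage speed, so real transfers will be slower; the 100 GB dataset and the 1 Gb/s comparison link are assumptions chosen only for contrast.

```python
def ideal_transfer_seconds(size_bytes, link_gbps):
    """Lower bound on transfer time: bits to move divided by the nominal link rate.

    Ignores encoding, protocol overhead, and storage speed.
    """
    return size_bytes * 8 / (link_gbps * 1e9)


size = 100e9  # a hypothetical 100 GB dataset
for label, rate in [("FDR InfiniBand", 56.0), ("1 Gb/s Ethernet", 1.0)]:
    print(f"{label}: {ideal_transfer_seconds(size, rate):.1f} s")
# FDR InfiniBand: 14.3 s
# 1 Gb/s Ethernet: 800.0 s
```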

Parallel File System

A parallel file system (also known as a clustered or distributed file system) keeps data and metadata in separate services, allowing HPC clients to communicate directly with the storage servers.
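Separating metadata from data is what lets clients talk to storage servers directly: once a client knows a file's layout (its chunk size and the ordered list of storage targets), it can compute which target holds any byte. The Python sketch below assumes simple round-robin striping; the `StripeLayout` fields, the 512 KiB chunk size, and the target names are illustrative assumptions, not KUACC's configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StripeLayout:
    """Hypothetical per-file layout a client would obtain from the metadata service."""
    targets: tuple                # storage target IDs, in stripe order
    chunk_size: int = 512 * 1024  # bytes per chunk (assumed value)


def locate(layout, offset):
    """Map a byte offset in the file to (target, offset within that target's data).

    Chunks are dealt round-robin across targets, so chunk i lives on
    target i % n as that target's (i // n)-th chunk.
    """
    n = len(layout.targets)
    chunk_index = offset // layout.chunk_size
    target = layout.targets[chunk_index % n]
    local_offset = (chunk_index // n) * layout.chunk_size + offset % layout.chunk_size
    return target, local_offset


layout = StripeLayout(targets=("t1", "t2", "t3", "t4"))
print(locate(layout, 1_000_000))  # ('t2', 475712)
```

Because the mapping is pure arithmetic, the metadata service is consulted once per file rather than once per read or write.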

The KUACC HPC cluster uses the BeeGFS parallel file system. Its buddy mirroring feature provides high availability.
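Buddy mirroring pairs targets into buddy groups so that each piece of data is stored on two buddies; if one becomes unavailable, the other can keep serving it. The Python model below is a deliberate simplification: writes go to every online buddy, reads prefer the primary and fail over to the secondary, and resynchronization after a failure is not modeled. The `BuddyGroup` class and the target names are hypothetical.

```python
class BuddyGroup:
    """Simplified model of a mirrored pair of storage targets."""

    def __init__(self, primary, secondary):
        self.members = [primary, secondary]  # primary first
        self.data = {primary: {}, secondary: {}}
        self.online = {primary: True, secondary: True}

    def fail(self, member):
        self.online[member] = False

    def _live_members(self):
        return [m for m in self.members if self.online[m]]

    def write(self, key, value):
        live = self._live_members()
        if not live:
            raise RuntimeError("both buddies are offline")
        for m in live:  # mirror the write to every reachable buddy
            self.data[m][key] = value

    def read(self, key):
        live = self._live_members()
        if not live:
            raise RuntimeError("both buddies are offline")
        return self.data[live[0]][key]  # primary if up, else the secondary


group = BuddyGroup("target-a", "target-b")
group.write("chunk-0", b"results")
group.fail("target-a")        # the primary goes down
print(group.read("chunk-0"))  # b'results', served by the secondary
```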

File Storage

Inactive data on the cluster is stored on storage units that are slower and lower-cost than the parallel file system.

The KUACC HPC cluster uses Dell and NetApp enterprise storage units. The NetApp storage holds the /datasets and /userfiles areas, while the Dell storage holds the /frozen area, where archive data is stored.

There is NO BACKUP on HPC systems. Users are responsible for backing up their own files!